We’ve been talking about SKAN for a while now. However, it’s worth mentioning that SKAN isn’t the only game in town, which is one reason why mobile marketers have a tough time comparing performance metrics across different sources.

In today’s episode, Shamanth runs us through the different non-SKAN sources of measurement, such as Apple Search, Google UAC, fingerprinting and web-to-app, to name a few. He explains why metrics from these sources can’t be compared like for like – and what the best course of action is in situations like this.

***

Note: We have a growing community of mobile marketers!

The Mobile Growth Lab Slack: The community that began as part of our workshop series, The Mobile Growth Lab, is now open to the general public. Join over 200 mobile marketers to discuss challenges and share your expertise. More details are available here: https://mobileuseracquisitionshow.com/slack/

If you’re ready to join the growing community, fill out this form: https://forms.gle/cRCYM4gT1tdXgg6u5

ABOUT ROCKETSHIP HQ: Website | LinkedIn | Twitter | YouTube

KEY HIGHLIGHTS

🥗 SKAN isn’t the essence of measurement

🍎 Apple Search

🧉 Google UAC

🎽 Relying on probabilistic matching

⚾️ Attribution on web-to-app

🎨 Different SKAN sources will have different results

FULL TRANSCRIPT BELOW

Apples, oranges and other fruits: the measurement mess with SKAN and non-SKAN conversion data on iOS

In discussions about measurement signal loss on iOS with ATT, there has been a lot of focus on SKAN – and rightfully so.

Yet one point that these discussions tend to miss is that SKAN is but one part of the picture – a big reason for the mess we are in on iOS is that SKAN is not the only measurement protocol out there. If all sources rigorously used SKAN, they would be easier to measure than they are now – but that is unfortunately not the case.

Let’s get into some of these complications, one source at a time.

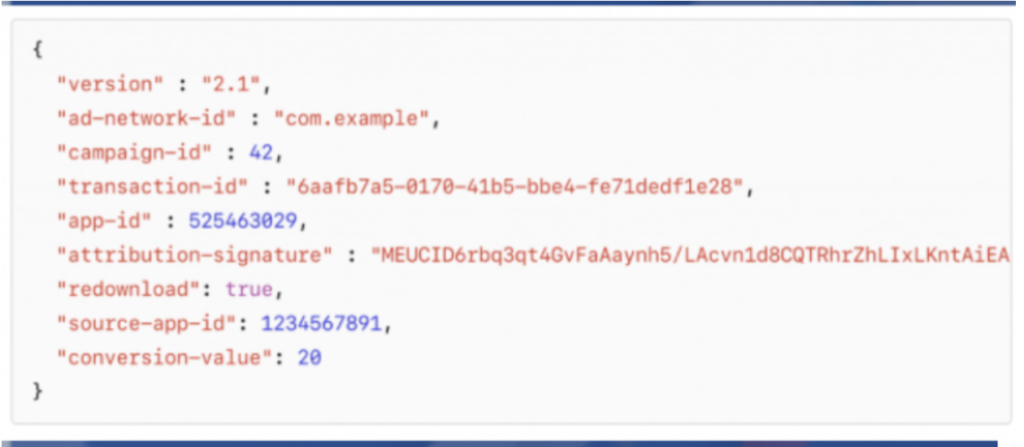

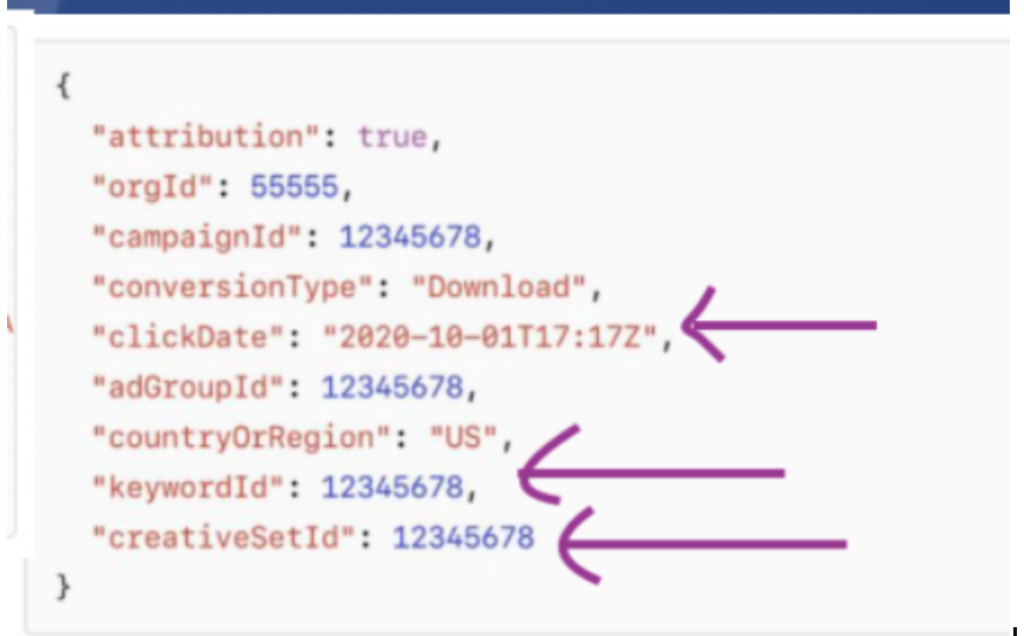

The first one is Apple Search, the elephant in the room. Apple Search attributes performance via what it calls the Apple Ad Services API. In the show notes, we’ve included a screenshot of the postback from the Ad Services API alongside the postback from SKAN – and you’ll see that the two are very different. Most significantly, the Ad Services postback includes a click date, a keyword ID and a creative set ID – which lets Apple Search attribute performance with much more granularity than SKAN does.

SKAN

Ad Services

***

Next in line – and the other elephant in the room – is Google UAC. Yeah, so many elephants – so little time. 🙂

Google does not use SKAN for primary optimization and reporting – it uses Firebase-tracked events instead. Google does have a SKAN report, but it is buried in the reporting section and is hard to use and comprehend.

Just as significantly, when we compare metrics from the SKAN reporting within Google against Firebase and other sources, the SKAN reporting looks massively off. Specifically, we see ridiculously high CPAs in Google’s SKAN reporting (over 20x what Firebase reports) – these just don’t line up with what our media mix models or incrementality analyses say. So SKAN metrics within Google are practically useless.

As such, we primarily rely on Google-reported (technically Firebase-reported) metrics in the UAC dashboard.

***

Next up: ad networks that rely on probabilistic matching – or fingerprinting, if you will. Metrics from these channels are near-deterministic, as installs are matched using device characteristics. There is still some amount of signal loss – but you will see CPA and ROAS numbers that are significantly better than what is reported by SKAN.

***

Web-to-app isn’t explicitly covered by SKAN. Technically, you place an attribution link on your website CTA that leads a user to the app stores – and the link attributes users via probabilistic matching. There’s some amount of signal loss here – but you’ll definitely see more signal than you do with SKAN.

***

It’s also worth noting that even different sources that work with SKAN aren’t always directly comparable unless their campaign architecture is identical (which is almost never going to be the case).

What do we mean by that?

Apple allows a maximum of 100 campaigns in SKAN, but different networks define ‘campaign’ differently. Facebook, for instance, allows a maximum of 9 campaigns, each of which can have a maximum of 5 ad groups. TikTok allows a maximum of 11 campaigns with a maximum of 2 ad groups per campaign.

What is unclear is how Facebook and TikTok map their campaigns, ad sets and creatives to SKAdNetwork campaigns. What is likely is that Facebook and TikTok treat certain ad sets or certain creatives as a SKAN ‘campaign’ – so in effect these are phantom campaigns, even though the UI shows them as ‘ad groups’ or ‘ads’.

And because SKAdNetwork campaigns are also affected by privacy thresholds (a SKAN campaign with very few installs or conversions per day shows up as having a conversion value of NULL rather than the actual conversion value), you might have 2 campaigns with the same actual CPA – but SKAN might show a lot of null conversions for one and actual conversions for the other.

In short, you have to take things with an enormous pinch of salt even when comparing 2 sources that both use SKAN – and of course, it is much harder to compare a SKAN source and a non-SKAN source.
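To make the privacy threshold point concrete, here is a minimal sketch in Python. Everything in it is a made-up illustration: the function names, the spend figures and the postback lists are assumptions, and it treats any non-null conversion value as one visible conversion, which is a simplification of how conversion values are actually mapped.

```python
from typing import Optional, Sequence


def reported_cpa(spend: float, conversion_values: Sequence[Optional[int]]) -> Optional[float]:
    """CPA computed only from postbacks whose conversion value is not null.

    Simplifying assumption: every non-null conversion value is one conversion.
    """
    visible = sum(1 for cv in conversion_values if cv is not None)
    return spend / visible if visible else None


def null_rate(conversion_values: Sequence[Optional[int]]) -> float:
    """Share of postbacks that arrived with a null conversion value."""
    if not conversion_values:
        return 0.0
    nulls = sum(1 for cv in conversion_values if cv is None)
    return nulls / len(conversion_values)


# Hypothetical data: both campaigns spent the same and drove 10 real conversions.
campaign_a = [1] * 10                     # volume clears the threshold
campaign_b = [1, 2, 3, 4] + [None] * 6    # low volume, 6 values nulled out

print(reported_cpa(1000.0, campaign_a), null_rate(campaign_a))
print(reported_cpa(1000.0, campaign_b), null_rate(campaign_b))
```

With identical spend and identical real conversions, the second campaign looks far more expensive purely because more of its conversion values were nulled.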

In summary, measurement is a HUGE mess right now.

***

This of course is why we always recommend leaning on non-deterministic measurement to the extent possible – and at the very least using blended numbers as the source of truth.
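As a rough illustration of what “blended numbers” can mean in practice, here is a minimal Python sketch. It assumes blended CPA is total spend across all channels divided by total conversions from your own first-party data, regardless of which source claims them. The function name, channel names and figures are hypothetical.

```python
from typing import Mapping


def blended_cpa(spend_by_channel: Mapping[str, float], total_conversions: int) -> float:
    """Total spend across every channel divided by all conversions seen in-app."""
    if total_conversions <= 0:
        raise ValueError("total_conversions must be positive")
    return sum(spend_by_channel.values()) / total_conversions


# Hypothetical spend for one week, by channel.
spend = {
    "apple_search": 2000.0,
    "google_uac": 3000.0,
    "facebook": 4000.0,
    "tiktok": 1000.0,
}

# Hypothetical conversions counted in your own analytics, not in any
# network's SKAN or non-SKAN report.
print(blended_cpa(spend, 500))
```

Because the denominator comes from your own data rather than from each network’s attribution, the number does not depend on whether a given source reports via SKAN, Firebase or probabilistic matching.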

A REQUEST BEFORE YOU GO

I have a very important favor to ask – which, as those of you who know me know, I don’t do often. If you get any pleasure or inspiration from this episode, could you PLEASE leave a review on your favorite podcasting platform – be it iTunes, Overcast, Spotify or wherever you get your podcast fix. This podcast is very much a labor of love – and each episode takes many, many hours to put together. When you write a review, it will not only be a great deal of encouragement to us, but it will also support getting the word out about the Mobile User Acquisition Show.

Constructive criticism and suggestions for improvement are welcome, whether on podcasting platforms – or by email to shamanth at rocketshiphq.com. We read all reviews & I want to make this podcast better.

Thank you – and I look forward to seeing you with the next episode!